Making Google Analytics and jQuery Mobile Work Together

Posted: October 20, 2012 | Filed under: mobile, webdev | Tags: google analytics, jquery

The jQuery Mobile framework is excellent for many things, but its single-page architecture can be hard to track through analytics packages. This post will show you how to make some tweaks to your Google Analytics insert code to help it gather statistics from jQuery Mobile.

A little more background on why this is necessary… Within jQuery Mobile, each page view is just a snippet of HTML on a single HTML page, pulled into the browser view by JavaScript. Most analytics packages treat these visits as a single page load, so your page view statistics end up looking like, well, not much: the only page recorded is the source page, index.html. Not very helpful in terms of analysis… Google Analytics requires a few tweaks to be able to record these JavaScript-generated page views. Here are the steps to make that happen.

Step 1: Include the asynchronous Google Analytics script loader between the <head> tags in your jQuery Mobile index page.

<script type="text/javascript">

var _gaq = _gaq || [];

_gaq.push(['_setAccount', 'UA-XXXXXXXX-XX']);

_gaq.push(['_gat._anonymizeIp']);

(function() {

var ga = document.createElement('script'); ga.type = 'text/javascript'; ga.async = true;

ga.src = ('https:' == document.location.protocol ? 'https://ssl' : 'http://www') + '.google-analytics.com/ga.js';

var s = document.getElementsByTagName('script')[0];

s.parentNode.insertBefore(ga, s);

})();

</script>

All we have done here is bring the Google Analytics JavaScript tracking code onto the page. You will need to specify the web property ID you received when setting up your Google Analytics web property to identify the web site/app you want to track. (Put that ID in place of the “UA-XXXXXXXX-XX” value above.) We used the asynchronous loader because it lets other parts of the page load as connections become available, which helps with performance over spotty mobile networks (or dodgy networks anywhere…).

Step 2: Using the jQuery base library script and its native functions, add a “track page views” event right before the closing of the <body> tag in your jQuery Mobile index page.

<script type="text/javascript">

$('[data-role=page]').on('pageshow', function (event, ui) {

try {

_gaq.push( ['_trackPageview', event.target.id] );

console.log(event.target.id);

} catch(err) {

// ignore tracking errors so they never interrupt page navigation

}

});

</script>

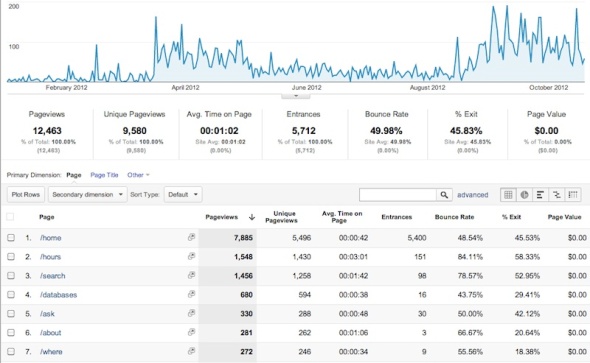

In the routine above, we bind a handler to the jQuery Mobile “pageshow” event, which fires each time one of the page snippets is displayed. The handler calls the Google Analytics _trackPageview method, so every page that is shown gets recorded rather than just the initial load of index.html. With the line _gaq.push(['_trackPageview', event.target.id]); we are telling Google Analytics to record the id of the page being shown into our analytics data. With these changes, the logs start to look more familiar and each snippet of HTML that has been visited will be part of the log record.

[Figure 1: Screenshot showing Google Analytics recording each page view in a jQuery Mobile app]
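As a small variation on Step 2, here is a minimal sketch of building the tracking command in a helper so it can be tested outside the browser. The helper names (toVirtualPath, buildPageviewCommand) and the "unnamed-page" fallback are my own assumptions, not part of Google Analytics or jQuery Mobile. The leading slash is also an assumption: it makes the recorded value look like a regular page path, so the page would show up as "/search" rather than "search".

```javascript
// Hypothetical helper: turn a jQuery Mobile page id into a "virtual" page path.
function toVirtualPath(pageId) {
  // Trim whitespace and fall back to a placeholder when a page has no id.
  var id = (pageId || '').replace(/^\s+|\s+$/g, '');
  return '/' + (id || 'unnamed-page');
}

// Hypothetical helper: build the command array that is handed to _gaq.push().
function buildPageviewCommand(pageId) {
  return ['_trackPageview', toVirtualPath(pageId)];
}

// In the browser this could be wired up roughly as:
// $(document).on('pageshow', '[data-role=page]', function (event) {
//   _gaq.push(buildPageviewCommand(event.target.id));
// });

// Outside the browser, just show the command for the "search" page.
console.log(buildPageviewCommand('search'));
```

Keeping the command-building logic in a plain function means you can check what will be sent to Google Analytics without loading a page at all.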

A final note: Under this method of tracking, the way you identify your individual pages within jQuery Mobile is important. Use markup and names that will make sense when you see them in your Google Analytics logs (or any logs for that matter…). Some sample markup:

<div data-role="page" id="search" data-theme="d">

Because the page id has been set up intuitively, I will be able to look for “search” in my Google Analytics reports and get specific tracking data about that particular “page”.

To see a full HTML example with all of the above code in context, visit http://www.lib.montana.edu/~jason/files/touch-jquery/ and “view source”.

Mapping your location with Google Static Maps API (tutorial)

Posted: March 18, 2011 | Filed under: code/files, libraries, webdev | Tags: geo, google maps

The Google Static Maps API (code.google.com/apis/maps/documentation/staticmaps/) allows for a dead simple way to embed maps showing a specific location for mobile and desktop environments. The real advantage of the Google Static Maps API is that the embed comes in the form of an HTML image <img> tag. In this quick codelab tutorial, I’m going to show you how to create a static map and set a location using the Google Static Maps API. If you have a familiarity with HTML, the markup for the image should be familiar.

<img src="http://maps.google.com/maps/api/staticmap?center=Bozeman,MT&zoom=13&size=310x310&markers=color:blue|45.666671,-111.04859&mobile=true&sensor=false" />

As I mentioned earlier, it is a simple <img> tag with a call linking into the Google Static Maps API. In order to assign a specific location we need to pass a few parameters to the Google Static Maps API. Included among the customizations:

- A central location for the map

- A zoom level for the map

- A size for our map image

- A marker color and position using latitude and longitude

- A mobile display setting, with no location sensor in use

The necessary pieces to enter are a central location and your specific latitude and longitude. Enter your hometown for the central location in the format of {city, state or principality}. To get your latitude and longitude, visit gmaps-samples-v3.googlecode.com/svn/trunk/geocoder/getlatlng.html and type in your library address. Copy the latitude and longitude values that are returned. Enter these position values as a comma separated string {latitude,longitude}.

Here’s the image src= markup with cues for where to add your values.

http://maps.google.com/maps/api/staticmap?center={YOURHOMETOWN}&zoom=13&size=310x310&markers=color:blue|{YOUR LATITUDE,LONGITUDE}&mobile=true&sensor=false

Once you have the link filled out, add it to your <img> tag on a live HTML page and you will have a local map to your library or any other specific location.
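If you need maps for more than one location, the URL can be assembled from its parts instead of edited by hand. This is a minimal sketch, not an official client: buildStaticMapUrl and its option names are hypothetical, and the endpoint and parameters are simply the ones used in this post (newer versions of the API may expect different ones). Values are URL-encoded, so the comma and pipe characters appear as %2C and %7C in the result.

```javascript
// Hypothetical helper: assemble a Static Maps image URL from its parts.
// The endpoint and parameter names are copied from the example in this post.
function buildStaticMapUrl(options) {
  var base = 'http://maps.google.com/maps/api/staticmap';
  var marker = 'color:' + options.markerColor + '|' + options.lat + ',' + options.lng;
  var params = [
    ['center', options.center],
    ['zoom', options.zoom],
    ['size', options.width + 'x' + options.height],
    ['markers', marker],
    ['mobile', 'true'],
    ['sensor', 'false']
  ];
  // Encode each value so spaces, commas and pipes are safe inside a URL.
  var query = params.map(function (pair) {
    return pair[0] + '=' + encodeURIComponent(pair[1]);
  }).join('&');
  return base + '?' + query;
}

var mapUrl = buildStaticMapUrl({
  center: 'Bozeman,MT',
  zoom: 13,
  width: 310,
  height: 310,
  markerColor: 'blue',
  lat: 45.666671,
  lng: -111.04859
});
console.log(mapUrl);
```

The resulting string goes into the src attribute of the <img> tag exactly as the hand-built link would.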

Unix Global Find and Replace (using find, grep, and sed)

Posted: April 23, 2008 | Filed under: code/files, webdev | Tags: unix

Finding and replacing strings and characters can be a dicey operation for a web developer. Too much can lead to breaking your web site. Too little can lead to missing that piece of HTML needing to be updated or deleted. Many tools exist that can help you in the process – Dreamweaver, Homesite, and many text editors have powerful interfaces for searching and replacing. (E.g., use Ctrl-F on Windows or Cmd-F on Mac to see the Find and Replace window in Dreamweaver.) But there’s some really great functionality on the command line for Unix/Linux users that shouldn’t be overlooked. I’ve been experimenting with a procedure for making these global matches and replaces within the Unix shell environment and I wanted to document the process somewhere. This seems like as good a place as any…

Important: All the commands below must be run from the shell environment on a Unix or Linux system. If you aren’t sure what I’m talking about, check the Wikipedia reference for shell.

Step 1: Find the pattern needing to be replaced or updated, print out files needing change

find . -exec grep 'ENTER STRING OR TEXT TO SEARCH FOR' '{}' \; -print

*Note: I’m using the “find” and “grep” commands to search for a matching pattern which will print out a list of files and directories that need changes. If I’m at the top level of my web site the “.” in the find command will search for the pattern down through any directories below. On a large site, the process can take some time.

Step 2: Move/copy files into test directory to test expression; preserve owners, groups, timestamps

cp -p -r test test-backup

*Note: These directories would be named according to the directories or files you need to change based on the results from Step 1. The “-p” will preserve owners, groups, and timestamps in the copied directory. The “-r” will copy recursively down through any associated sub-directories. I do this so I can compare the new directory to the original directory after I’ve test-run the global changes.

Step 3: Run test on find and replace expression in /test-backup/ directory

find . \( -name "*.php" -or -name "*.html" \) | xargs grep -l 'ENTER STRING OR TEXT TO SEARCH FOR' | xargs sed -i -e 's/ENTER OLD STRING OR TEXT TO REPLACE/ENTER REPLACEMENT STRING OR TEXT/g'

*Note: I’m using the “find .” command to search for .php and .html files under the current working directory, as I only want to target the files that need to be touched (you should change this according to your requirements). Next, I’m piping that list to the “grep -l” command, which searches for the string or text specified and outputs only the names of the files that contain it. Finally, I’m passing those file names to the “sed” command, which matches the old string or text and replaces it in place with the new value.

Step 4: Test files and applications to ensure changes didn’t break functionality and that owners, groups, timestamps were preserved

Step 5: After testing, run expression from Step 3 in ALL the directories or files needing the changes. Delete /test-backup/ directory

Steps 1 and 3 are the heart of the matter. I’m learning the power of these commands, so I’m pretty cautious about backing up and testing on directories and files that aren’t live. Once I have the expression dialed in, I’ll run it on a more global scale. So, there you have it – my find and replace process in a nutshell. Use at your own discretion and feel free to share your thoughts in the comments.
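If you would like to rehearse a change before letting sed touch anything, the logic of Step 3 can be approximated as a dry run. The following is a rough Node.js sketch under my own assumptions, not a replacement for the shell commands: findFiles and previewReplace are hypothetical names, the match is a literal string rather than a sed regular expression, and nothing is written back to the files being searched. The demo creates its own throwaway directory so no real files are involved.

```javascript
var fs = require('fs');
var os = require('os');
var path = require('path');

// Recursively collect files under dir whose extension is in the list
// (the rough equivalent of: find . \( -name "*.php" -or -name "*.html" \)).
function findFiles(dir, extensions) {
  var results = [];
  fs.readdirSync(dir).forEach(function (name) {
    var fullPath = path.join(dir, name);
    if (fs.statSync(fullPath).isDirectory()) {
      results = results.concat(findFiles(fullPath, extensions));
    } else if (extensions.indexOf(path.extname(name)) !== -1) {
      results.push(fullPath);
    }
  });
  return results;
}

// Dry run: list each file containing oldText along with the content it
// would have after a global, literal replacement. Nothing is saved.
function previewReplace(dir, extensions, oldText, newText) {
  var changes = [];
  findFiles(dir, extensions).forEach(function (file) {
    var before = fs.readFileSync(file, 'utf8');
    if (before.indexOf(oldText) !== -1) {
      changes.push({ file: file, after: before.split(oldText).join(newText) });
    }
  });
  return changes;
}

// Demo against a temporary directory with one matching and one ignored file.
var demoDir = fs.mkdtempSync(path.join(os.tmpdir(), 'replace-demo-'));
fs.writeFileSync(path.join(demoDir, 'index.html'), '<p>old text, old text</p>');
fs.writeFileSync(path.join(demoDir, 'notes.txt'), 'old text');
var preview = previewReplace(demoDir, ['.php', '.html'], 'old text', 'new text');
console.log(preview);
```

Only index.html is reported here, because notes.txt does not have one of the targeted extensions.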

Ajax Workshop – Internet Librarian 2007

Posted: October 29, 2007 | Filed under: code/files, conferences/events, education, il2007, webdev

My preconference, “AJAX for Libraries”, with Karen Coombs went really well. It’s always great working with Karen. She’s cool, composed, and “wicked” knowledgeable. For the second year in a row, we had a great group of participants. It’s nice to see a growing interest in emerging web programming frameworks and how they might be applied to libraries.

Here are the updated slides and links:

“AJAX for Libraries” Presentation

“AJAX for Libraries” Handout (Code Samples and Explanations)

“AJAX for Libraries” Code Downloads

And some additional examples of libraries using AJAX:

What are your patrons doing online?

Posted: June 13, 2007 | Filed under: libraries, webdev

BusinessWeek has a quick snapshot of U.S. user activities online broken down by task and ages. (The study was conducted by the Forrester Research group.)

Source: http://www.businessweek.com/magazine/content/07_24/b4038405.htm.

Good news if you are into the web2.0 stuff and you work in college libraries. The 18-21 set and 22-26 set are well represented in most task categories. And how about those task categories: Creator, Collector, Spectator, Joiner, Critic, etc. They help to define the possible tasks of web2.0 users. Granted, the activities recorded refer to patterns in the web at large, but they give libraries some guidance as to what our users are doing online. It’s also interesting to note the “Inactives”. There’s a whole population that doesn’t live and breathe the web. I get caught up in the “everybody’s online” thing, so it’s a nice gentle reminder.

The key is homing in on one or two likely applications for your library community and giving them a go. Want to enable the Collectors? Think about an XML feed to pieces of your digital collections. Or what about building a service for the Critics? Build a tagging or a comment/rating system into the catalog or digital collection.

An admission: it’s all fine and good for the student set, but college libraries have other interested parties like faculty and university admin. Part of the library role might be to “coach” these other parties into possible roles they might take up in their web use. A faculty member trying to build a research bibliography seems like the perfect candidate to become a “Collector” once given the right library tool or app. In this setting, outreach and education are still a library web developer’s best friend.

“TERRA: The Nature of our World” gets Webby Nomination

Posted: April 25, 2007 | Filed under: conferences/events, webdev

For the second time in as many months, “TERRA: The Nature of our World” (http://lifeonterra.com) has been noticed by the web critics. This time it’s for the Webbys, which have been called “the Oscars of the Internet”, and with judges like Beck and David Bowie it’s definitely got some celeb street cred. (The full MSU news story is available at http://www.montana.edu/cpa/news/nwview.php?article=4822.)

Once again, anyone has the opportunity to vote. TERRA’s nomination can be found in the student category of the Online Film and Video section at http://pv.webbyawards.com. Votes will be accepted through Friday, April 27 and winners announced May 1.

But the voting is not really the point of the post… I love that digital library initiatives was a cornerstone in this effort. There’s an opportunity here for all diginit folk. Find your niche as a content manager and provider. My “in” was metadata, creating XML for syndication, relational database design, a little PHP magic, and creating a search backend. The key was answering the need for a content manager and developing relationships towards that end. Think about what could be your “in”.

“TERRA: The Nature of our World” gets South by Southwest (SXSW) Nomination

Posted: February 28, 2007 | Filed under: conferences/events, webdev

I’ve mentioned the TERRA group project in previous posts. Earlier this month, I received some great news regarding the project. “TERRA: The Nature of our World” was nominated in the student/university website category by SXSW interactive Web Awards.

TERRA is a partnership between Montana PBS, The Media/Theatre Arts Department at Montana State University, Montana State University libraries, and various independent filmmakers. Montana State University libraries was brought in to build/code the site and content management (metadata, data preservation architecture…). The site was designed with a nod to the future of digital libraries. It’s a digital video library with commenting, ratings, tags AND a controlled vocabulary. And it’s all wrapped up with some AJAX functionality and a Dublin Core/OAI metadata backend. The TERRA group also experimented with syndicating our content as podcasts. You can actually search iTunes for TERRA and receive our podcasts. That’s powerful stuff and the reach of the site has been amazing. Just last week, TERRA podcasts were placed as default content in the download for Democracy Player, effectively doubling the TERRA audience in one fell swoop. More and more, I see leveraging these types of communities as the future of library content distribution.

As for the nomination, I am honored and just a little surprised. SXSW is the center of the web geek world and to even be considered is quite humbling. We’ve gotten some university press lately, but I didn’t see it going a lot further. (Check the MSU news release for complete details.) So, I’m heading to Austin, TX with a colleague in March. I’m excited to step off the library circuit and see how the other half lives. Stay tuned for SXSW updates.

And if the mood should strike you, vote for “TERRA: The Nature of our World” in the People’s Choice Award race at https://secure.sxsw.com/peoples_choice/.

S3 (Simple Storage Service) – Amazon and Libraries

Posted: January 10, 2007 | Filed under: libraries, webdev

Have you heard of Amazon’s S3 (Simple Storage Service)? From the site:

Amazon S3 is “storage for the Internet” with a simple Web services interface that can be used to store and retrieve any amount of data, at any time, from anywhere on the Web.

It’s one of Amazon’s newer web services. At 15 cents/gig of storage, it’s a pretty cheap option. Caveat emptor: S3 is intended for developers as a storage option that can be queried with SOAP and REST web services, so they also get you for network traffic at 25 cents/gig. I wasn’t able to find anything in the fine print about checksum routines and the integrity of the objects, but I’m assuming backups and error checking are part of the Amazon routine. (Update from the horse’s mouth: found this thread in the forums which talks about Amazon’s data protection routines. It’s reassuring…)

Can the library use this? I think so. Even with the mentioned caveats, in the end you are looking at taking the server management side out of the equation. That’s pretty liberating for the small digital shops that our libraries are. At work, we’re experimenting with using the service to store some of our master digitization objects. I mentioned that this was an experiment, right? We’ve got some objects on the S3 servers and are looking into building a web interface that will allow our Special Collections staff to pull down master files when they receive requests from patrons. We’re also working with a campus entity to store media files on S3 and then building a search interface to query S3 for the data. It’s all a work in progress, but something to consider. I can tell you that my library and university will never have the infrastructure or access to a network cloud like Amazon’s. That’s not a knock; them’s just the facts.

(Sidebar: If you’re interested in web services, think about browsing around the Amazon Web Services Developer Connection. Lots of code examples, “howtos” and discussion to get you thinking about web service applications. Don’t be afraid to get your hands dirty and make some mistakes. It’s the only way to learn.)

Anatomy of a Function

Posted: November 30, 2006 | Filed under: code/files, php, webdev

It’s been a little while since my last post. My recent work schedule and Turkey Day played a part in that. I’ve been working .com hours on a super cool project. (I mentioned the TERRA group in an earlier post.) I’ve learned so much in the last couple of weeks. It’s amazing what a hard deadline and a shifting set of requirements will do to your web programming skills. All the hard work is about to bear fruit as the new TERRA web site is about to go live. Have a look at a different kind of digital library.

But, that’s not the point of this post… I wanted to share a little code that made some data conversion very simple over the course of the TERRA project. It’s a simple little PHP function that converts a MySQL timestamp into an RFC 822 date format. (For the project, we stored the item update fields as timestamps and then converted them when we generated our various XML feeds. RFC 822 or RFC 2822 dates are necessary for valid feeds.) Here’s the PHP function in all its glory:

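//function converts mysql timestamp into rfc 822 date

function dateConvertTimestamp($mysqlDate)

{

$rawdate = strtotime($mysqlDate);

// strtotime() returns false on failure (-1 before PHP 5.1)

if ($rawdate === false || $rawdate == -1) {

$convertedDate = 'conversion failed';

} else {

// H is the 24-hour clock; h would give 12-hour time with no am/pm marker

$convertedDate = date('D, d M Y H:i:s T', $rawdate);

}

// return in both cases so a failed conversion is reported to the caller

return $convertedDate;

}

//end dateConvertTimestamp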

You call the function by including it on the page and using the following code:

$newPubdate = dateConvertTimestamp($stringToConvert);

echo $newPubdate;

Where $stringToConvert would be any MySQL timestamp value that needs conversion.

In the end a string like this “2005-05-17 12:00:00” looks something like this “Tue, 17 May 2005 12:00:00 EST”. You could also reverse the conversion using this php function:

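//function converts rfc 822 date into mysql timestamp

function dateConvert($rssDate)

{

$rawdate = strtotime($rssDate);

// strtotime() returns false on failure (-1 before PHP 5.1)

if ($rawdate === false || $rawdate == -1) {

$convertedDate = 'conversion failed';

} else {

// H is the 24-hour clock that the MySQL timestamp format uses

$convertedDate = date('Y-m-d H:i:s', $rawdate);

}

// return in both cases so a failed conversion is reported to the caller

return $convertedDate;

}

//end dateConvert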

NOTE: If/when you copy and paste the above code, check that all double and single quotes are plain straight quotes and retype any that are not. WordPress can do a number on the proper format by converting them to curly quotes.
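If the conversion ever needs to happen in JavaScript rather than PHP (say, when building a feed with a script), the first function can be sketched along these lines. This is an illustration under my own assumptions, not code from the TERRA project: mysqlToRfc822 is a hypothetical name, the stored timestamp is assumed to be in UTC, and a failed conversion is reported as null instead of a message string.

```javascript
// Hypothetical helper: convert a MySQL "YYYY-MM-DD HH:MM:SS" timestamp into
// an RFC 822-style date string. Assumes the stored timestamp is in UTC.
function mysqlToRfc822(mysqlDate) {
  var pattern = /^(\d{4})-(\d{2})-(\d{2}) (\d{2}):(\d{2}):(\d{2})$/;
  var match = pattern.exec(mysqlDate);
  if (!match) {
    // Signal a failed conversion instead of returning a bogus date.
    return null;
  }
  // Months are zero-based in JavaScript, so subtract 1 from the month field.
  var millis = Date.UTC(
    Number(match[1]), Number(match[2]) - 1, Number(match[3]),
    Number(match[4]), Number(match[5]), Number(match[6])
  );
  // toUTCString() yields the "Tue, 17 May 2005 12:00:00 GMT" style of date.
  return new Date(millis).toUTCString();
}

console.log(mysqlToRfc822('2005-05-17 12:00:00'));
console.log(mysqlToRfc822('not a timestamp'));
```

Returning null for bad input lets the calling code decide whether to skip the item or log the problem.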

I just wanted to share the wealth a bit. If you’ve got questions or suggestions, don’t be shy about dropping a comment. I’ll be home in Wisconsin for the next several days, but I’ll have limited internet access there. I’ll try to answer questions if they arise.

Dueling Ajax – couple of articles

Posted: November 14, 2006 | Filed under: publications, webdev

I’ve been a bit of the Ajax poster boy lately. Two pieces that I wrote for library audiences have just been published.

“Building an Ajax (Asynchronous JavaScript and XML) Application from Scratch.” Computers in Libraries 26, no. 10 (November/December 2006).

“Ajax (Asynchronous JavaScript and XML): This Isn’t the Web I’m Used To.” Online 30, no. 6 (November/December 2006)

uri: http://www.infotoday.com/Online/nov06/Clark.shtml

Both articles fall into the “introductory” mode, although the CIL article walks you through a proof of concept Ajax page update script (mentioned in an earlier post…). I want to be clear: I’m not an Ajax evangelist. I find the suite of technologies that make Ajax go intriguing and the improvements that the Ajax framework can make to some library applications are worth learning about and applying. I tried to point out the good and the bad. Although, it is a four letter word…

I did want to mention a couple of books that were really helpful in getting me up to speed with the Ajax method.

Ajax in Action by Dave Crane, Eric Pascarello and Darren James

DHTML Utopia: Modern Web Design Using JavaScript & DOM by Stuart Langridge

(Click on the book covers if you are into book learnin’ and want to browse the Amazon records.) Dig in and discover (or rediscover) some of the possibilities when you put JavaScript to work in your apps.